Learning to Track Disinformation and Bot Activity in Twitter

On a recent episode of OSINT Curious I had the pleasure of discussing disinformation investigations with the brilliant Jane Lytvynenko from BuzzFeed. Since the webcast, I have become enthralled with learning how to track and analyze Twitter bots and investigate disinformation campaigns. I am certainly no Jane Lytvynenko, but I wanted to openly work through some of the methodologies I am learning which in turn may help other beginners.

Oddly enough, in starting this investigation my biggest hurdle was finding a bot. This sounds ridiculous because we see bots all day long on Twitter, but honestly I had trouble finding one I was excited to investigate. Suddenly the prospect seemed overwhelming and I needed a way to narrow the scope a bit. After looking around for a few hours and growing increasingly discouraged, I checked in with a few amazing resources for researching mis/disinformation: The Verification Handbook, UNESCO’s Disinformation Handbook, Reuters’ Identifying and Tackling Manipulated Media, and the Global Investigative Journalism Network. Now refocused with a strategy, I found 2 accounts to investigate, as we will see in the cases below.

Case 1-Tools Aplenty

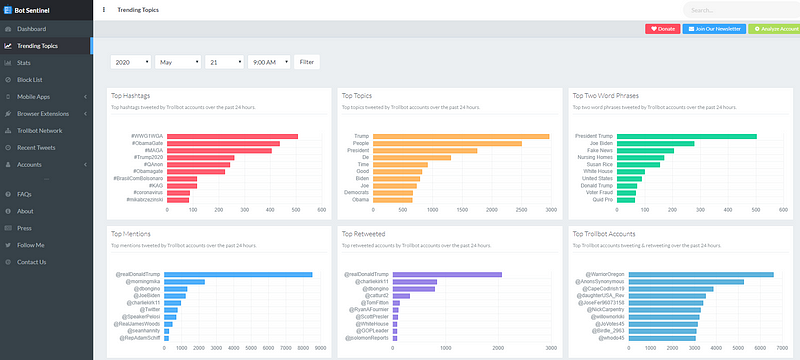

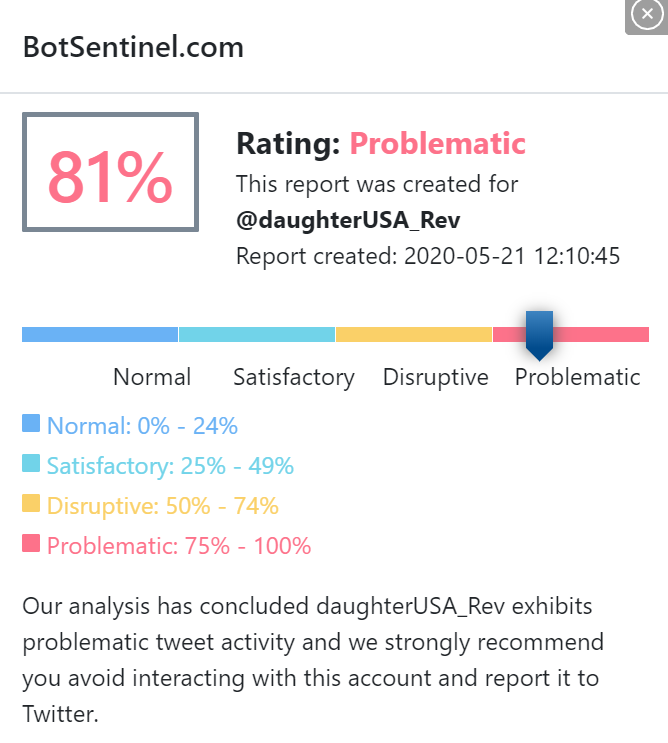

After reading through the resources listed above I decided to focus my attention by using the Bot Sentinel dashboard. Bot Sentinel is a fun tool that allows you to visualize accounts and bot networks while also providing a score based on their problematic behavior. The main panel shows things like Top Hashtags, Top Topics, Top Word Phrases, and so on.

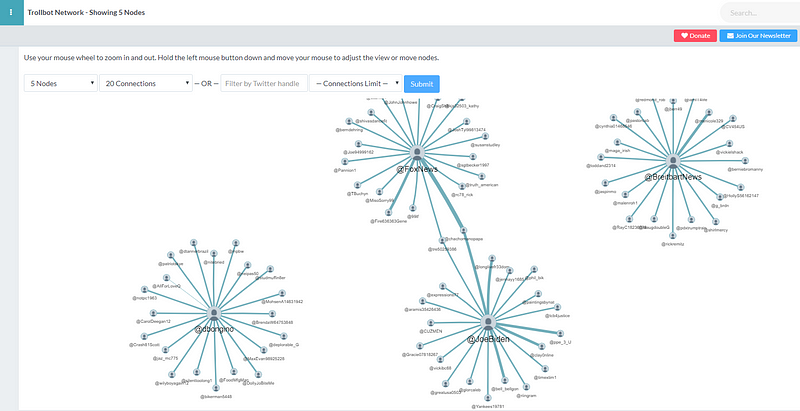

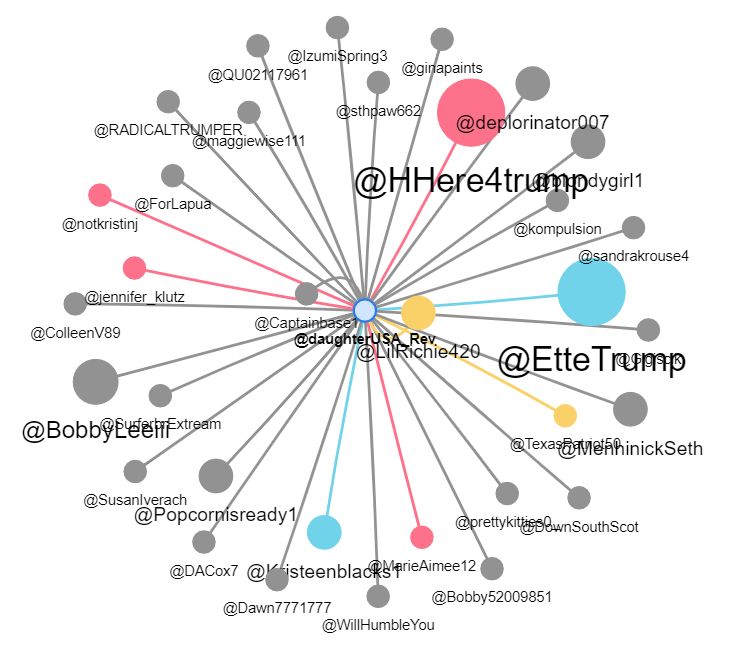

Bot Sentinel also has a panel that allows for visualization of connections between networks. Below we can see a node chart showing the connectivity between several bot accounts and the accounts they interact with.

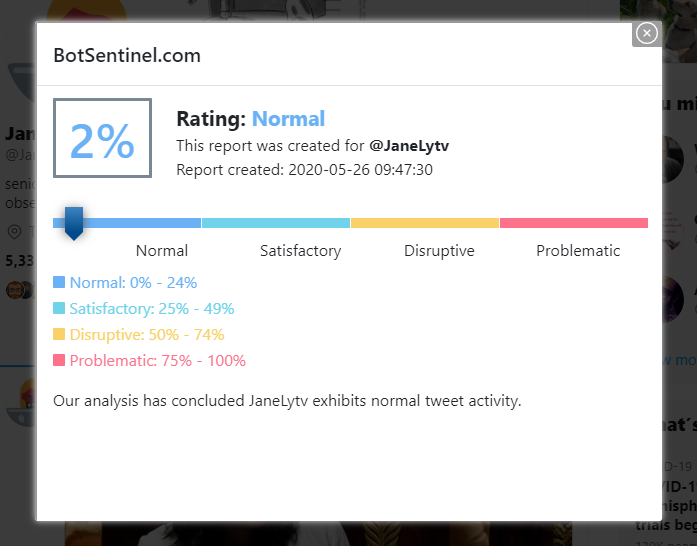

Additionally, Bot Sentinel has a browser extension that allows for a quick check of a Twitter user’s behavior. We can see here when I go to Jane’s account the extension adds a “check user” button that when pressed opens up a rating box. Alternatively, we can click on the bot icon in the extensions and manually add a Twitter handle or URL to check.

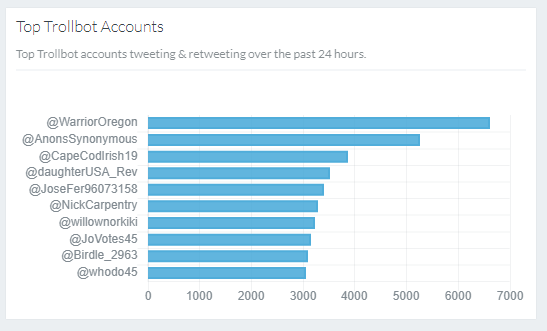

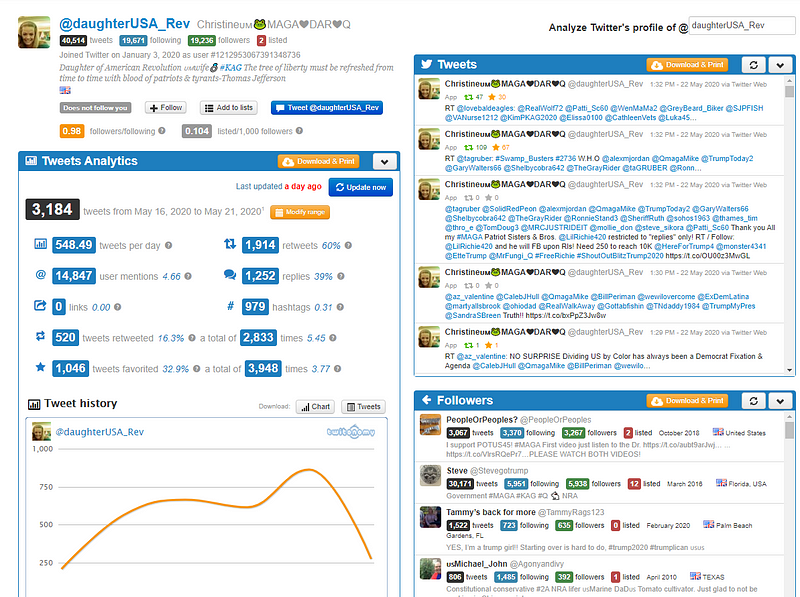

Turning back to the main dashboard, if we dive deeper into the Trollbot accounts panel we can see the top tweeting/retweeting accounts on this specific day. For this case study I chose to go with the account “daughterUSA_Rev” for no particular reason other than it was kinda in the middle as far as tweet counts.

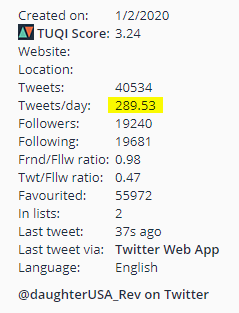

On the account I noticed a few red flags that this was a Trollbot (aside from the warning that Bot Sentinel gives about the account being a Trollbot). Firstly, the account has a creation date of January 2020 yet already has 40,000+ tweets. If we do the math on that, something doesn’t add up: 40,400 tweets / 141 days ≈ 286 tweets per day! The thought of tweeting 286 times a day gives me anxiety.
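The same back-of-the-envelope math can be scripted for any account. This is only a minimal sketch: the function name `tweets_per_day` is my own, and the exact dates below are assumptions chosen to give the 141 days used above, since the profile only shows a creation month.

```python
from datetime import date


def tweets_per_day(total_tweets: int, created: date, observed: date) -> float:
    """Average number of tweets per day between creation and the day we looked."""
    days_active = (observed - created).days
    if days_active <= 0:
        raise ValueError("observed date must be later than the creation date")
    return total_tweets / days_active


# Assumed dates: the profile only says "January 2020", so January 1 is a guess,
# and May 21 is picked because it is exactly 141 days later.
rate = tweets_per_day(40_400, date(2020, 1, 1), date(2020, 5, 21))
print(f"{rate:.1f} tweets per day")  # 286.5 tweets per day
```

A high daily rate on its own proves nothing, but it is a quick number to compare across accounts before digging deeper.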

This account ticks a lot of boxes for me and appears to be exactly what I was looking for:

✔ KAG hashtag (Keep America Great)

✔ Recently created account

✔ USA in the name

✔ MAGA

✔ Q

✔ DAR (Daughters of the American Revolution)

✔ Pepe, the American flag, “patriots”, “liberty”

✔ Follower count = Following count

If we click the “check user” button we immediately see that this account has been rated as being problematic.

Here is a visualization of the most active accounts interacting with our Trollbot account. The red nodes indicate other problematic accounts.

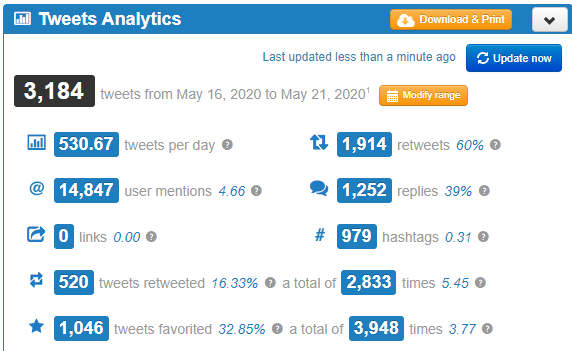

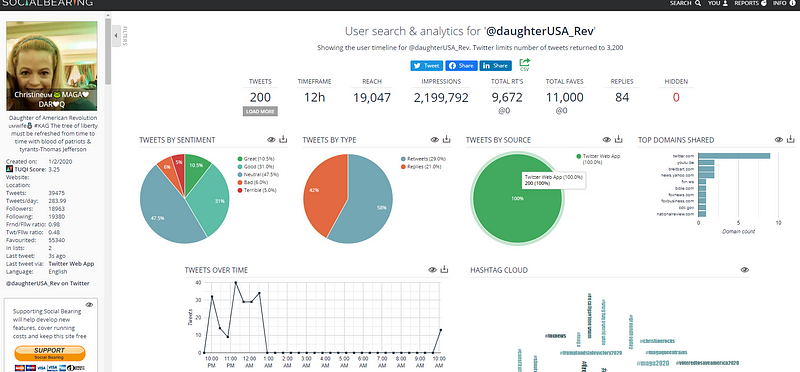

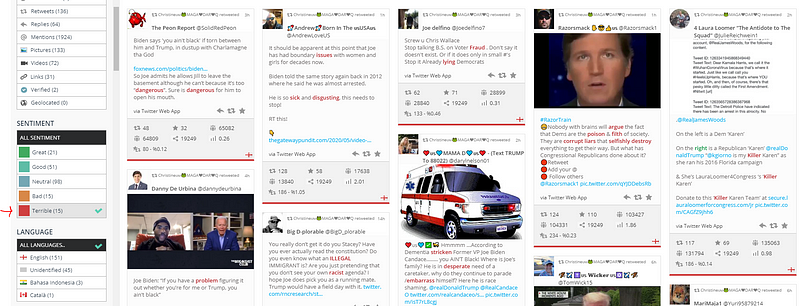

Another way to analyze the content of this account is through Twitonomy. Twitonomy is a platform for measuring and tracking analytics and is used as a marketing tool to monitor business competition. The dashboard isn’t as pretty as Bot Sentinel but I like the way Twitonomy shows how the target account is tweeting, retweeting, and interacting with other accounts.

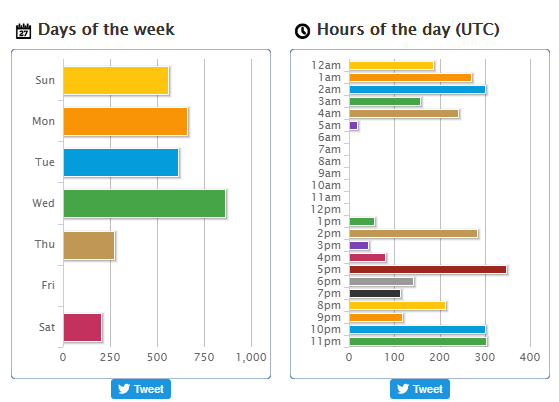

I also find the days of the week and hours of the day charts to be a highly useful indicator of bot activity. If there is no break in tweeting, that is usually a good sign that the account is not a human. This is not foolproof, however, since bots can be made to tweet in patterns that mimic human behavior.
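If you have a list of tweet timestamps for an account, the "no break in tweeting" check can be roughed out in a few lines. This is a sketch, not how Twitonomy does it: the function names are hypothetical, and it assumes the timestamps have already been converted to the account's likely local time zone.

```python
from collections import Counter
from datetime import datetime


def tweets_by_hour(timestamps):
    """Return a list of 24 counts, one per hour of the day (0-23)."""
    counts = Counter(ts.hour for ts in timestamps)
    return [counts.get(hour, 0) for hour in range(24)]


def longest_quiet_stretch(hourly_counts):
    """Longest run of consecutive hours with zero tweets, wrapping past midnight."""
    if not any(hourly_counts):
        return 24  # no tweets at all
    best = run = 0
    # Walk the day twice so a quiet run that crosses midnight is counted as one.
    for count in hourly_counts + hourly_counts:
        if count == 0:
            run += 1
            best = max(best, run)
        else:
            run = 0
    return best


# Made-up sample: tweets at 08:00, 09:00, 13:00 and 22:00.
sample = [datetime(2020, 5, 20, hour, 0) for hour in (8, 9, 13, 22)]
print(longest_quiet_stretch(tweets_by_hour(sample)))  # 9 (23:00 through 07:59)
```

An account whose longest quiet stretch is only an hour or two across weeks of data deserves a closer look, with the same caveat as above that a scheduled bot can fake a sleep window.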

Another telling characteristic of bots is how they like to retweet other bots that are spreading a similar message. If we take a look through our target account’s retweets we begin to see a pattern emerge. Many use the same hashtags in their bio, have very similar follower/following counts, and post nothing but retweets.

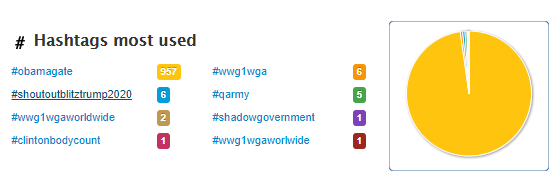

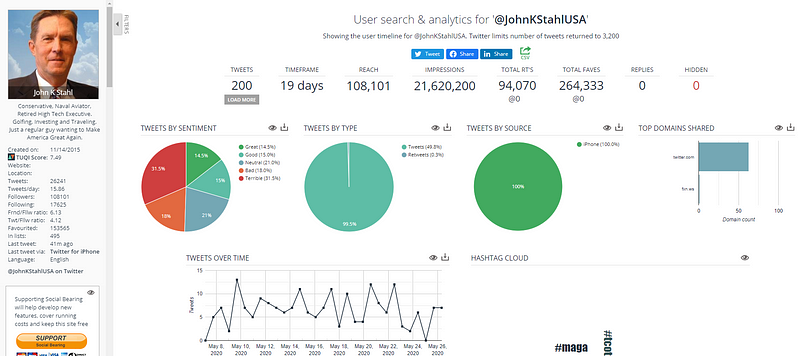
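To put a number on "nothing but retweets", you can tally how an account's activity splits between retweets, replies, and original tweets. The sketch below assumes you have already exported the tweets into a list of dictionaries with a `kind` field; that field name and the `activity_mix` function are made up for illustration and are not part of any tool's export format.

```python
def activity_mix(tweets):
    """Share of retweets, replies, and original tweets, each between 0.0 and 1.0."""
    kinds = ("retweet", "reply", "original")
    total = len(tweets)
    if total == 0:
        return {kind: 0.0 for kind in kinds}
    return {
        kind: sum(1 for tweet in tweets if tweet["kind"] == kind) / total
        for kind in kinds
    }


# Made-up sample: three retweets and one original tweet.
sample_tweets = [
    {"kind": "retweet"},
    {"kind": "retweet"},
    {"kind": "retweet"},
    {"kind": "original"},
]
print(activity_mix(sample_tweets))
# {'retweet': 0.75, 'reply': 0.0, 'original': 0.25}
```

A reply share of zero next to a very high retweet share is the pattern to watch for, though plenty of humans also mostly retweet.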

One final tool I want to take a look at is Social Bearing. This site allows us to search for keywords, trending topics, and people. The dashboard gives us a great overview of our target’s activity on Twitter. One cool thing that Social Bearing offers is the Tweets by Sentiment chart. The Sentiments chart shows the overall vibe of tweets by this account. We can tell that neutral tweets take up about 50% of the chart and only 5% are considered terrible.

I soon realized I didn’t have to do any math back at the beginning of the blog because Social Bearing did it for me. On the left side of the dashboard it calculates the tweets per day along with a bunch of other useful numbers, like the friend/follower ratio and a Twitter User Quality Index (TUQI).

Our target account has a TUQI score of 3.24. The TUQI score is an attempt by Social Bearing to rate the quality of Twitter users through various metrics. Lower scores are more likely to be spam accounts or less engaged Twitter users.

A few other notable features on this site are the hashtag word cloud which gives a clue as to what the account focuses on, and the panel of tweets that shows metrics on a per tweet basis.

All said, many of the above indicators may be present in human-run accounts and are not always a sure sign of a bot. Use these metrics to help verify the authenticity of the account and validate before stating anything with certainty.

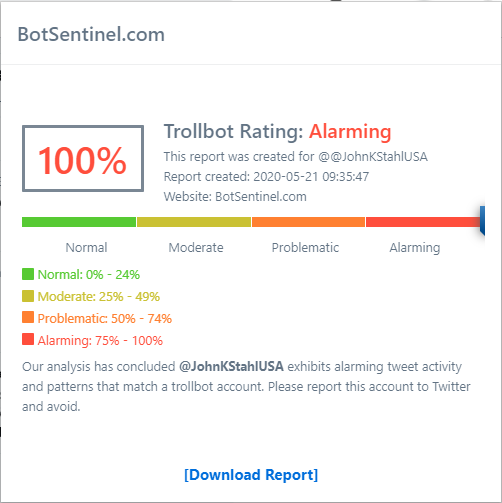

Case 2-Manipulated Images

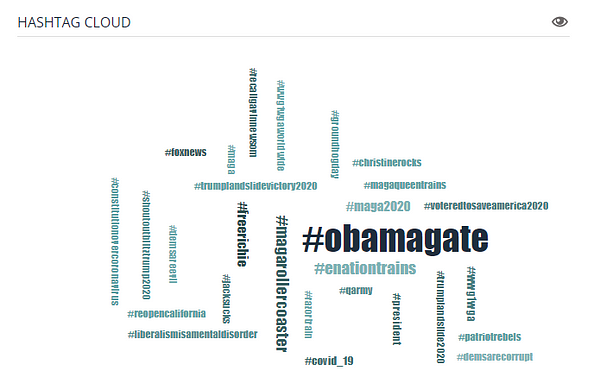

The next account I dove into was JohnKStahlUSA, an account that Bot Sentinel immediately flagged as an alarming Trollbot. The intriguing thing to me about this account is that it is a “real” person. This is not a surprise to me as often bots will be made to appear as the legitimate accounts of real people in order to spread their message. It is a surprise that no one else on Twitter seems to know if it belongs to the real person.

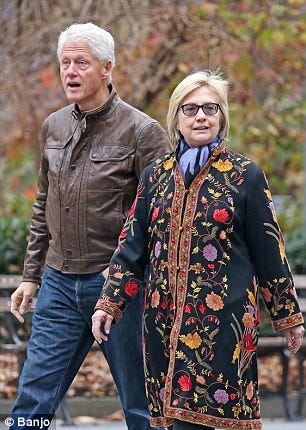

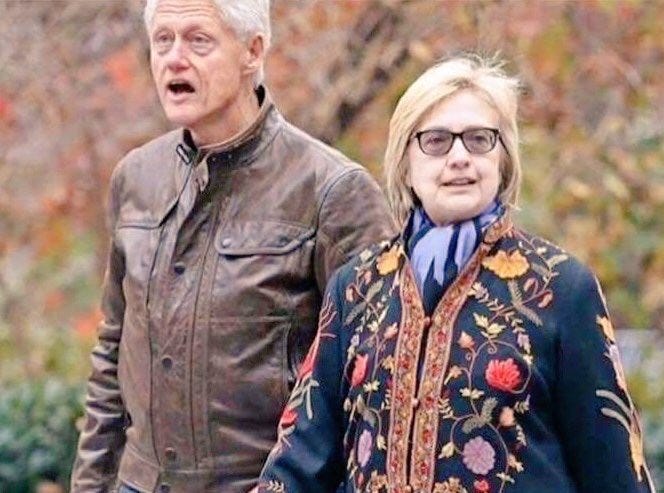

Another thing that drew me to this account was the blatant tweeting and retweeting of manipulated images. Below is a typical example of imagery shared from this account. The right image is the original (albeit bizarre), and the left image is the manipulated one.

As a graphic designer this alteration sticks out like a sore thumb, but I am not sure how obvious it is to the average MAGA crowd. There is an inherent danger in spreading images like this that are meant to rile up the political base.

Looking at the photo, it appears as though someone lightened it and warped Bill’s face to make him look sickly. We can really see the washout when looking at Hillary’s coat and Bill’s face. A simple Google search for “Bill Hillary Park” brought up the original photo in a story about the Clintons taking a walk in the park with Chelsea and her new baby. It is important that images like these are debunked quickly before they can spread.

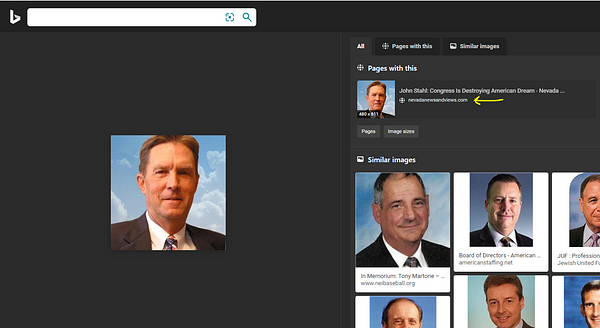

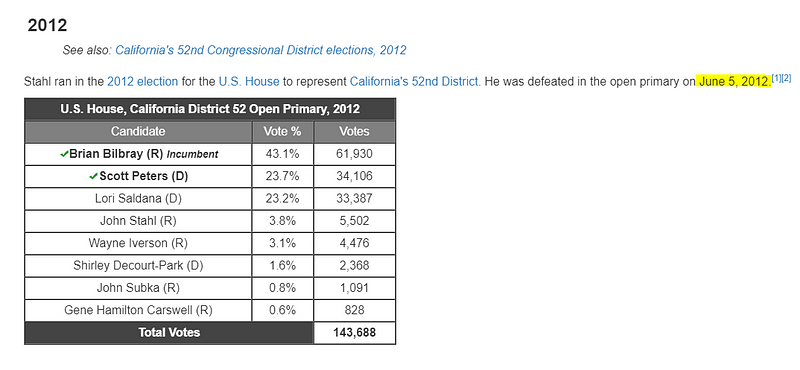

In an effort to determine whether this account was indeed a bot, I attempted to reverse image search the profile picture. The photo itself seemed odd to me and I assumed it was an AI-generated image until the search turned up a John Stahl who ran for Congress in California and lost in 2012.

I was able to find other news stories about this account, for instance, this one posted by Penn Live on May 24, 2020 stating President Trump has been sharing John Stahl’s tweets.

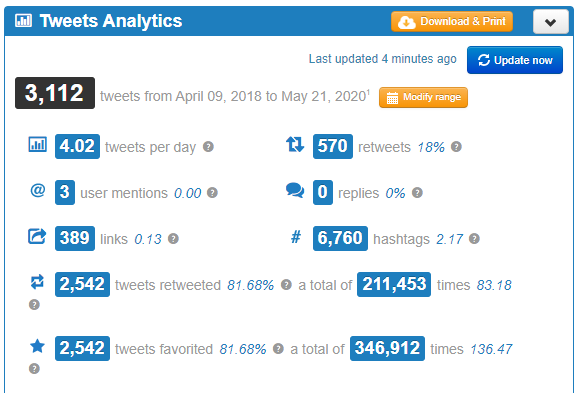

Just when I started to think I was looking at a real person, I turned to Twitonomy and saw that from 2018 to now the account has tweeted 3,112 times and replied to 0 tweets. This seems like odd behavior for a normal human.

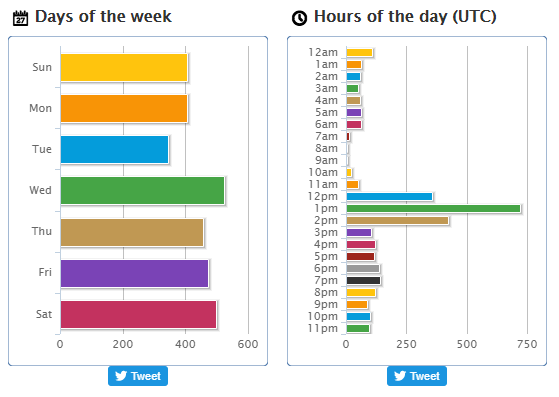

The days and hours this account tweets are also concerning. There does not appear to be a break in tweets where a human would need to sleep. However, it is plausible that a human could be tweeting all day and night, so we can’t definitively call this a bot based on those metrics.

Moving to look at Social Bearing, we can see his tweets are listed as being moderately terrible and his TUQI score is pretty high. This is not really new information but it shows it in a nice graph.

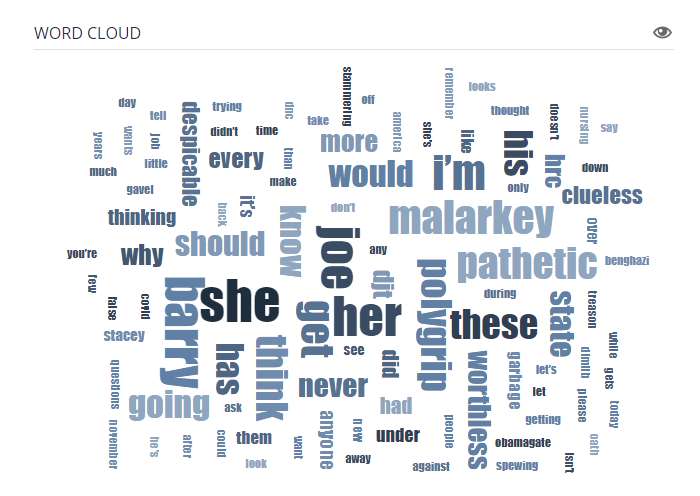

Again, I find the word clouds to be really telling. We can quickly see what messages this account cares about sending into the ether. I am starting to consider if it is all just a strange way to market Polygrip.

In a last-ditch effort to verify this account I took to Facebook. His account was easy to find, open to the public, and linked to several family members. Based on the interaction I tend to believe the Facebook account is legitimate. Also, the last post made in 2014 is hilariously relevant.

There are some similarities in the tone of writing but I am still unable to say with any level of certainty that this account belongs to the real John Stahl. My assumption is that it is real but we may never know. This was a fun exercise in tracking bot accounts and disinformation and I can live without knowing the truth for now.

As a final note, it is not lost on me that I used 2 Republican accounts as my examples. I am fully aware this happens on both sides and all mis/disinformation should ultimately be verified and treated the same way.